
The Data Science of Zen

By Michael Santos

It’s 2022, near the end of the year, and you’re trying to find digital editions of the dharma, but your prized texts are nowhere to be found. Then, suddenly, you find your saving grace: a website called Zen Marrow! It has every text relating to Zen Buddhism you could possibly want, but there’s just one catch: it isn’t very well organized. Zen Marrow is essentially a search engine that serves excerpts from texts based on keyword queries: type in a keyword or phrase and you’re met with matches of that phrase in scripture. You wanted the entire forest, however, and not just a tree. Then you notice that each query result, besides showing the excerpt containing your keyword(s), also lets you share that section by copy-pasting its URL.

Every excerpt URL has the same three parts: the address of the website itself, the excerpt number (its ID), and the text it came from (its index). Within an index, the excerpts are numbered in order. An ID of 1 is the first chapter (or case), an ID of 2 is the second, and so on up to the final excerpt. If each text has been uploaded here in its entirety, then it’s possible to reassemble complete digital copies of these texts, possibly ones that have never existed before! As for how, there’s nothing a little programming can’t solve:

```python
# Import the webdriver and By classes from the selenium module
from selenium import webdriver
from selenium.webdriver.common.by import By

# Placeholder: replace with the index of the text you want to download
INDEX = "indexgoeshere"

# Collect each excerpt's title and text in a list
sections = []

# Create a webdriver instance (opens a Chrome window)
driver = webdriver.Chrome()

# Set the implicit wait once: every find_element call polls for up to 10 seconds
driver.implicitly_wait(10)

try:
    # Iterate over excerpt IDs 1 through 100 (range's end is exclusive);
    # adjust the upper bound to the final excerpt ID of your index
    for i in range(1, 101):
        # Construct the URL using string formatting and the current ID
        url = f"https://zenmarrow.com/single?id={i}&index={INDEX}"

        # Load the URL in the webdriver
        driver.get(url)

        # Select the nodes with the "case-title" and "case-text" classes and get their text
        title = driver.find_element(By.CLASS_NAME, "case-title").text
        text = driver.find_element(By.CLASS_NAME, "case-text").text

        # Keep the title and text together, separated by a blank line
        sections.append(f"{title}\n\n{text}")
finally:
    # End the browser session even if a page fails to load
    driver.quit()

# Write every excerpt to a text file, with a blank line between excerpts
with open("text name.txt", "w", encoding="utf-8") as f:
    f.write("\n\n".join(sections) + "\n")
```

[DISCLAIMER: web scrape responsibly, please.]
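The scraper depends on the URL layout described earlier. The `single` page takes two query parameters, `id` and `index`. Below is a minimal sketch, using only Python's standard `urllib.parse`, that builds such a URL and reads the ID and index back out of one copied from a search result. The two function names are hypothetical helpers, and `indexgoeshere` is the same placeholder index the scraper uses.

```python
from urllib.parse import parse_qs, urlencode, urlparse

# Base address of an excerpt page, taken from the scraper's URL pattern
BASE_URL = "https://zenmarrow.com/single"


def build_excerpt_url(index: str, excerpt_id: int) -> str:
    """Build an excerpt URL from a text's index and an excerpt ID (hypothetical helper)."""
    return f"{BASE_URL}?{urlencode({'id': excerpt_id, 'index': index})}"


def parse_excerpt_url(url: str) -> tuple[str, int]:
    """Return (index, excerpt_id) from a shared excerpt URL (hypothetical helper)."""
    # parse_qs maps each query parameter name to a list of its values
    query = parse_qs(urlparse(url).query)
    return query["index"][0], int(query["id"][0])


url = build_excerpt_url("indexgoeshere", 7)
print(url)                     # https://zenmarrow.com/single?id=7&index=indexgoeshere
print(parse_excerpt_url(url))  # ('indexgoeshere', 7)
```

Before scraping, you can run a copied share link through `parse_excerpt_url` to confirm which index it belongs to and where in that text it sits.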

Run that for each index, and you have a nice folder of Zen texts at your fingertips. You might as well archive them to save others the trouble and money in the future.

Of course, that’s not where the story ends. I realized that I could make a small data science project out of this, so I did. Since we’re dealing with pieces of text here, text analysis of this library is the obvious choice, but how? Fear not, for we have four bastions of text analysis that we’ll be using today: word frequency analysis, collocation analysis, theme analysis, and named entity recognition. Here’s a quick rundown of what each of those means:

- Word frequency analysis is a method of analyzing a body of text by counting the number of times each word appears. This can be useful for identifying the most commonly used words in a text and for identifying words that are unusual or unexpected in the context of the text.

- Collocation analysis is a method of analyzing word co-occurrence patterns in a body of text. A collocation is a group of words that frequently appear together, such as “strong coffee” or “heavy rainfall.” Collocation analysis can be used to identify common phrases or idioms in a text and to identify words that are semantically related to one another.

- Theme analysis is a method of identifying and analyzing the major themes or ideas in a body of text. One way to do this is to identify keywords or phrases that occur frequently in the text and then group them into themes based on their meanings and relationships to one another.

- Named entity recognition (NER) is a technique in natural language processing that involves identifying and extracting named entities from a body of text. Named entities include proper nouns such as people, organizations, locations, and other entities named or referred to in the text. NER can extract structured information from unstructured text and is often used in tasks such as information extraction and document classification.
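Here is a toy sketch that makes the first two definitions concrete, using only the standard library on one made-up sentence. A `Counter` of words gives word frequencies. A `Counter` of adjacent word pairs gives the raw bigram counts that collocation analysis starts from. The sample sentence and the tiny stop-word set are illustrative assumptions, not data from the library.

```python
import re
from collections import Counter

# Illustrative sample text and stop-word set (assumptions for this sketch)
sample = "The finger points at the moon, but the finger is not the moon."
stop_words = {"the", "at", "but", "is", "not"}

# Lowercase the text and keep runs of letters/apostrophes as tokens
tokens = re.findall(r"[a-z']+", sample.lower())

# Word frequency: count every token that is not a stop word
word_freq = Counter(t for t in tokens if t not in stop_words)

# Raw bigram counts: pair each token with the one after it.
# Stop words are kept here, which is why "the" shows up in the top pairs.
bigram_freq = Counter(zip(tokens, tokens[1:]))

print(word_freq.most_common(2))    # [('finger', 2), ('moon', 2)]
print(bigram_freq.most_common(2))  # [(('the', 'finger'), 2), (('the', 'moon'), 2)]
```

Real collocation tools go a step further and score pairs with association measures such as PMI. Raw pair counts like these are simply the starting point.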

Starting from the top, here is the program for each type of text analysis:

frequencies.py:

```python
import os
import string
from collections import Counter

# Set the directory path
directory = "ZenDirectoryGoesHere"

# ASCII punctuation plus curly quotes and dashes, which string.punctuation does not cover
punctuation = string.punctuation + "“”‘’—–…"

# Read the list of stop words from its proper location (a set makes lookups fast)
with open("stop_words.txt", "r", encoding="utf-8") as f:
    stop_words = set(f.read().split())

# Initialize a list to store the words from all text files
all_words = []

# Iterate over the files in the Zen directory
for filename in os.listdir(directory):
    # Only process text files
    if not filename.endswith(".txt"):
        continue
    # Open the text file and read it line by line
    with open(os.path.join(directory, filename), "r", encoding="utf-8") as f:
        for line in f:
            # Remove the trailing newline first; otherwise line[-1] is always "\n"
            line = line.rstrip()
            # Skip blank lines and lines that end in a number, as these usually just contain the title
            if not line or line[-1].isdigit():
                continue
            for word in line.split():
                # Strip surrounding punctuation and lowercase the word
                word = word.strip(punctuation).lower()
                # Keep non-empty words that are not stop words
                if word and word not in stop_words:
                    all_words.append(word)

# Count the frequency of each word using the Counter class
word_counts = Counter(all_words)

# Open the output file and write the word frequencies to it, most common first
with open("word_frequencies.txt", "w", encoding="utf-8") as f:
    for word, count in word_counts.most_common():
        f.write(f"{word}: {count}\n")
```

collocations.py:

```python
import os
import re
from collections import Counter

from nltk.collocations import BigramCollocationFinder

# Set the directory containing the text files
directory = "ZenDirectoryGoesHere"

# Running total of bigram counts across all files
collocation_counts = Counter()

# Iterate through each text file in the directory
for file in os.listdir(directory):
    if not file.endswith(".txt"):
        continue
    # Open the file and read its contents
    with open(os.path.join(directory, file), "r", encoding="utf-8") as f:
        text = f.read()

    # Remove all punctuation and marks, keeping word characters and whitespace
    text = re.sub(r"[^\w\s]", "", text)

    # Split the text into a list of word tokens
    tokens = text.split()

    # Use NLTK's BigramCollocationFinder to count adjacent word pairs
    bigram_finder = BigramCollocationFinder.from_words(tokens)

    # ngram_fd maps each (word1, word2) bigram to its frequency; add it to the total
    collocation_counts.update(bigram_finder.ngram_fd)

# Write the collocations to a text file, most frequent first
with open("collocations.txt", "w", encoding="utf-8") as f:
    for (first, second), count in collocation_counts.most_common():
        f.write(f"{first} {second}: {count}\n")
```

themes.py:

```python
import os
from collections import Counter

import spacy

# Load a pre-trained English pipeline (install it with: python -m spacy download en_core_web_sm)
nlp = spacy.load("en_core_web_sm")


def generate_themes(directory):
    """Count candidate themes across every .txt file in a directory."""
    themes = Counter()
    for file in os.listdir(directory):
        # Only process text files
        if not file.endswith(".txt"):
            continue
        with open(os.path.join(directory, file), "r", encoding="utf-8") as f:
            contents = f.read()
        # Treat each named-entity span that spaCy finds as a candidate theme
        doc = nlp(contents)
        for entity in doc.ents:
            themes[entity.text] += 1
    return themes


# Call the function and pass the directory path as an argument
themes = generate_themes("ZenDirectoryGoesHere")

# Write the themes and their counts to a text file, sorted by count in descending order
with open("themes.txt", "w", encoding="utf-8") as f:
    for theme, count in themes.most_common():
        f.write(f"{theme}: {count}\n")
```

entities.py:

```python
import os

import spacy

# Set the directory containing the text files
directory = "ZenDirectoryGoesHere"

# Set up spaCy for named entity recognition
nlp = spacy.load("en_core_web_sm")

# Collect (entity text, entity label) pairs from every file
entities = []

# Iterate over the .txt files in the directory
for file in os.listdir(directory):
    if not file.endswith(".txt"):
        continue
    # Read the text, joining the directory path so the script works from any folder
    with open(os.path.join(directory, file), "r", encoding="utf-8") as f:
        text = f.read()

    # Perform named entity recognition using spaCy
    doc = nlp(text)
    entities.extend((entity.text, entity.label_) for entity in doc.ents)

# Write the results once, after every file is processed, one entity per line
with open("entities.txt", "w", encoding="utf-8") as f:
    for entity_text, label in entities:
        f.write(f"{entity_text}\t{label}\n")
```

After a little manual preprocessing, we feed the results to matplotlib, wordcloud, and a few other libraries, and are granted this gallery of beautiful images:

Looks pretty, but what does it all mean? Let’s go through each image case by case and break it down.
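The post doesn’t include its plotting code. Here is a minimal sketch of how a chart like the first one could be drawn. It reads the `word: count` lines that frequencies.py writes and plots them as a horizontal bar chart with matplotlib. The function names, the `top_n=20` cutoff, and the output filename are assumptions, not the settings used for the images.

```python
from pathlib import Path


def load_frequencies(path, top_n=20):
    """Parse 'word: count' lines (as written by frequencies.py) into (word, count) pairs."""
    pairs = []
    for line in Path(path).read_text(encoding="utf-8").splitlines():
        # rpartition splits on the last ": ", so the count is always the final field
        word, sep, count = line.rpartition(": ")
        if sep and count.isdigit():
            pairs.append((word, int(count)))
    # The file is already sorted most-common-first, so keep the first top_n entries
    return pairs[:top_n]


def plot_frequencies(pairs, out_path="word_frequencies.png"):
    """Draw a horizontal bar chart: counts on the x-axis, words on the y-axis."""
    import matplotlib.pyplot as plt  # imported here so load_frequencies works without matplotlib

    words = [word for word, _ in pairs]
    counts = [count for _, count in pairs]
    fig, ax = plt.subplots(figsize=(8, 6))
    # Reverse both lists so the most frequent word ends up at the top of the chart
    ax.barh(words[::-1], counts[::-1])
    ax.set_xlabel("freqs")
    fig.tight_layout()
    fig.savefig(out_path)
    plt.close(fig)
```

Usage: `plot_frequencies(load_frequencies("word_frequencies.txt"))`. A horizontal layout keeps long words readable on the y-axis.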

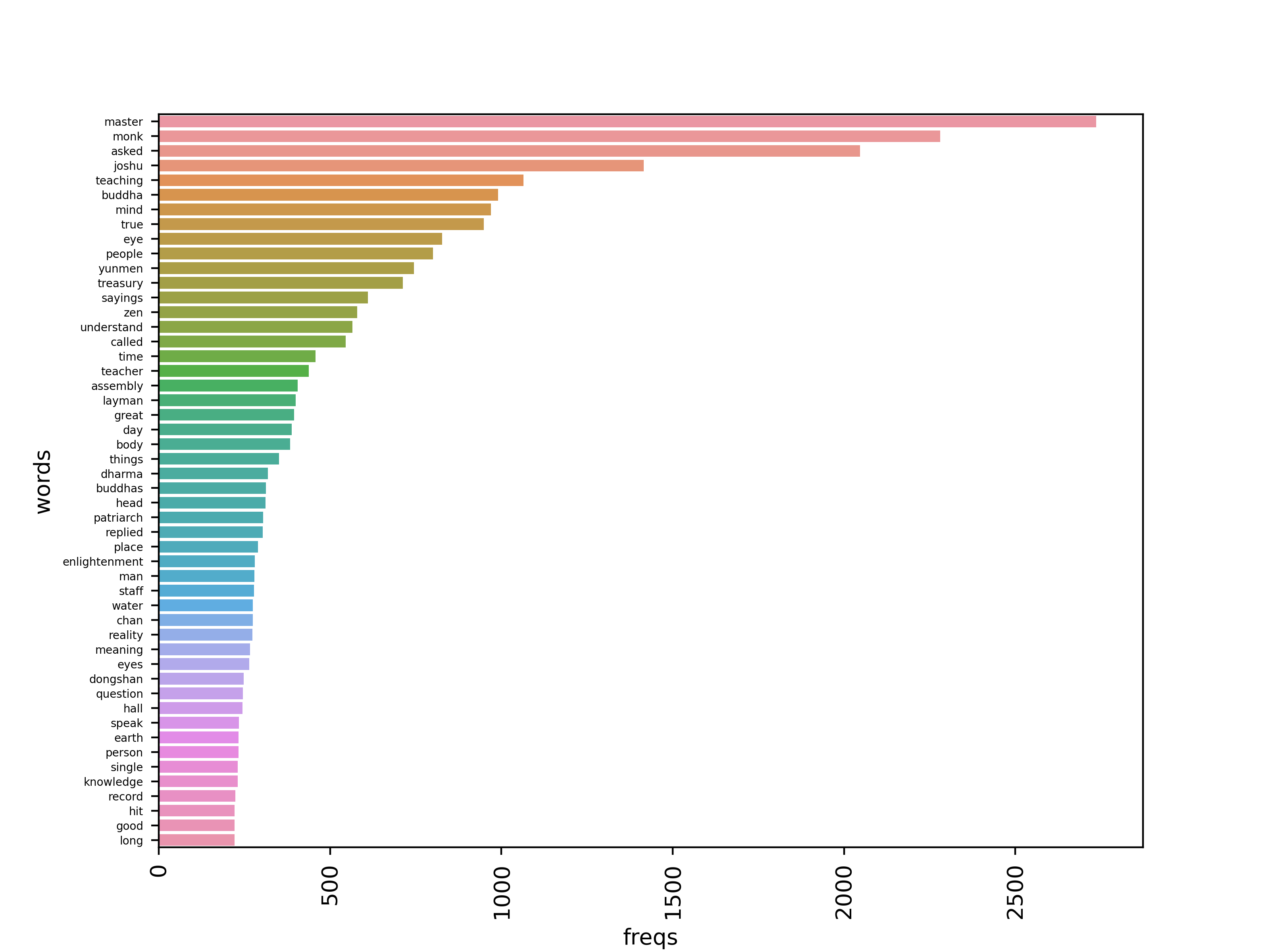

THE WORD FREQUENCY ANALYSIS:

It’s simple enough: the x-axis (freqs, for frequency) shows how many times each word on the y-axis occurs. Next up, we have:

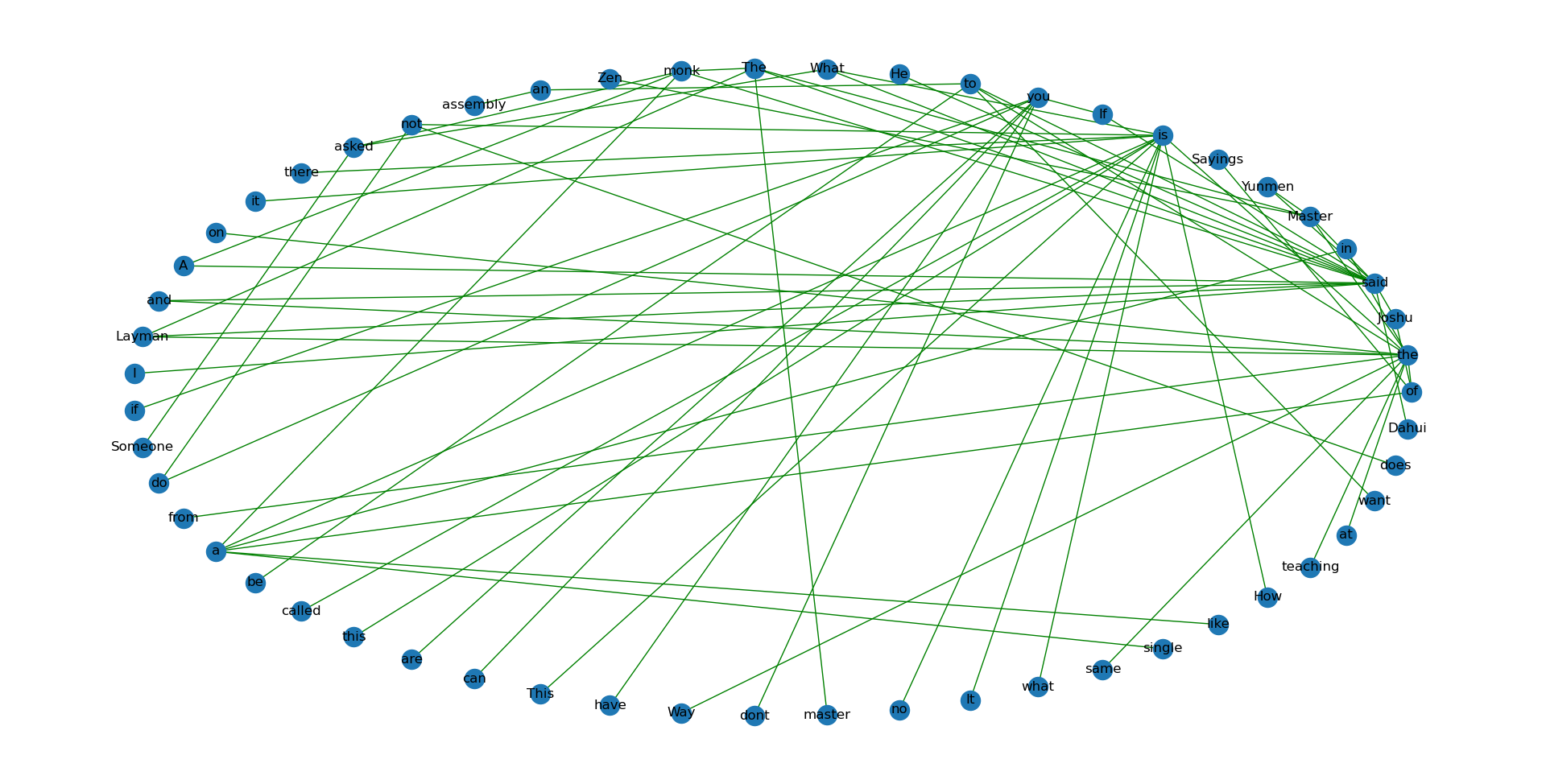

THE COLLOCATION ANALYSIS:

The words are arranged in a circle, and each bigram (pair of words) is drawn as a connection between its two words. When the same word appears in multiple pairs, it gets multiple arrows, one for each pair.
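A circular layout works by spacing the words evenly around a circle and drawing one arrow per bigram. Here is a minimal standard-library sketch of that geometry. It assumes the bigrams arrive as (word1, word2) pairs, like the ones collocations.py counts. networkx offers the same idea as `circular_layout`. The function names and sample pairs here are hypothetical.

```python
import math


def circular_layout(bigrams):
    """Place each distinct word evenly on a unit circle; return (positions, edges).

    bigrams: iterable of (first_word, second_word) pairs; each pair becomes one arrow.
    """
    edges = list(bigrams)
    # Distinct words in first-seen order, so the layout is deterministic
    words = list(dict.fromkeys(word for pair in edges for word in pair))
    n = len(words)
    positions = {
        word: (math.cos(2 * math.pi * k / n), math.sin(2 * math.pi * k / n))
        for k, word in enumerate(words)
    }
    return positions, edges


def arrows_per_word(edges):
    """Count arrow ends at each word: a word in several pairs gets several arrows."""
    counts = {}
    for first, second in edges:
        counts[first] = counts.get(first, 0) + 1
        counts[second] = counts.get(second, 0) + 1
    return counts


# Hypothetical bigrams, for illustration only
pairs = [("original", "face"), ("original", "mind"), ("mind", "buddha")]
positions, edges = circular_layout(pairs)
print(arrows_per_word(edges))  # {'original': 2, 'face': 1, 'mind': 2, 'buddha': 1}
```

Placing every word on the same circle means no word sits "behind" another. Crowding shows up only in the lines crossing the middle, which is where shared words become visible.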

THE THEME ANALYSIS:

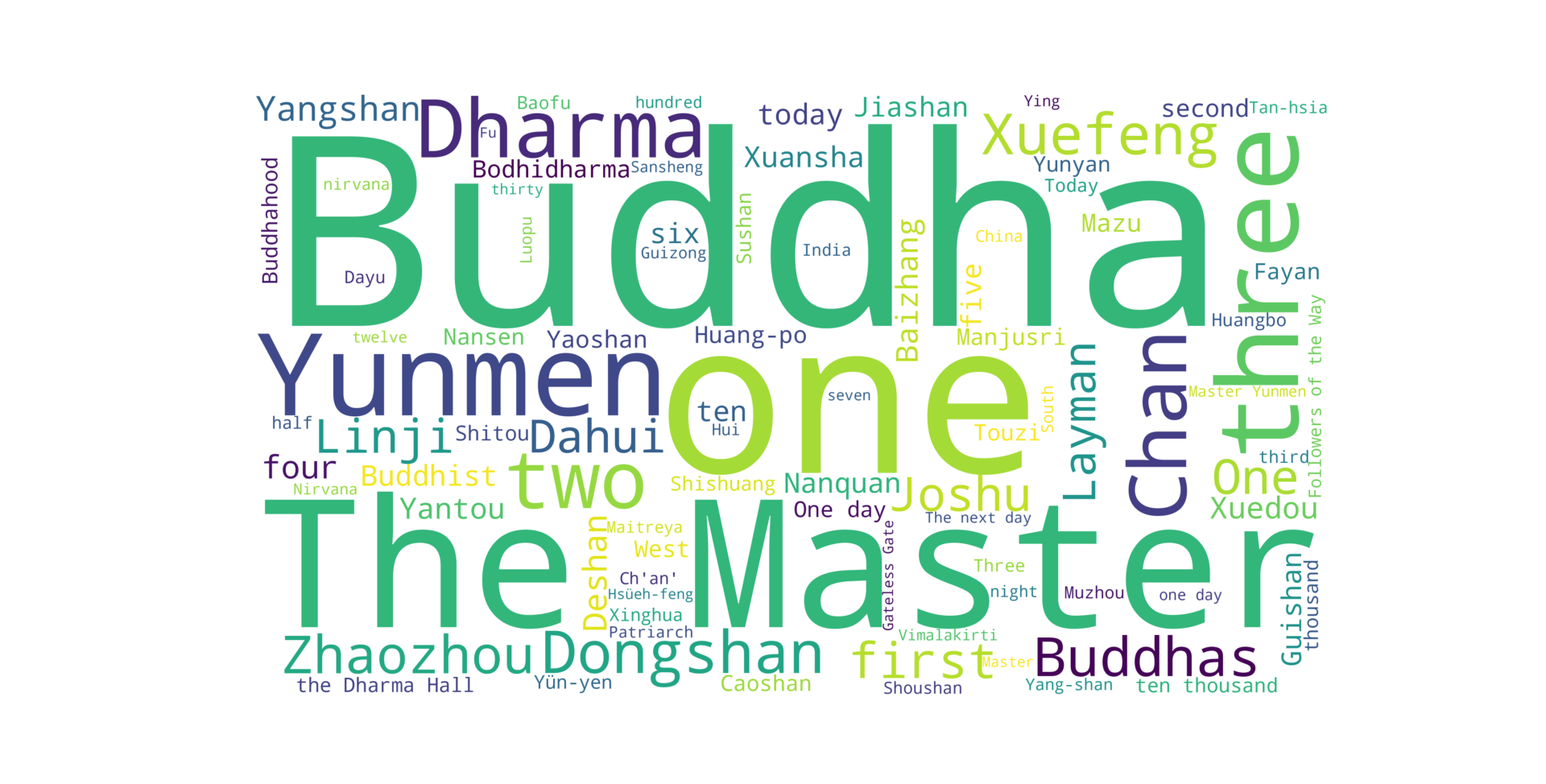

Each detected theme is shown along with the number of times it appears. The more appearances a theme makes, the bigger its font in the word cloud, so the theme with the highest count is displayed in the biggest font and the theme with the fewest appearances in the smallest.
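The sizing rule above can be illustrated with simple linear scaling between a minimum and a maximum font size. This is a simplified sketch, not the wordcloud library's exact algorithm, which also has to fit words into the available space. The 10- and 80-point limits, the function name, and the sample counts are assumptions. In practice you would pass the counts to wordcloud, e.g. `WordCloud().generate_from_frequencies(themes)`, and let it size the words.

```python
def font_sizes(counts, min_size=10, max_size=80):
    """Map each theme's count linearly onto a font size between min_size and max_size."""
    if not counts:
        return {}
    low, high = min(counts.values()), max(counts.values())
    span = high - low
    sizes = {}
    for theme, count in counts.items():
        # Position of this count between the smallest and largest (0.0 to 1.0);
        # when all counts are equal, every theme gets the largest size
        fraction = (count - low) / span if span else 1.0
        sizes[theme] = round(min_size + fraction * (max_size - min_size))
    return sizes


# Hypothetical theme counts, for illustration only
print(font_sizes({"Buddha": 40, "Linji": 22, "Bodhidharma": 4}))
# {'Buddha': 80, 'Linji': 45, 'Bodhidharma': 10}
```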

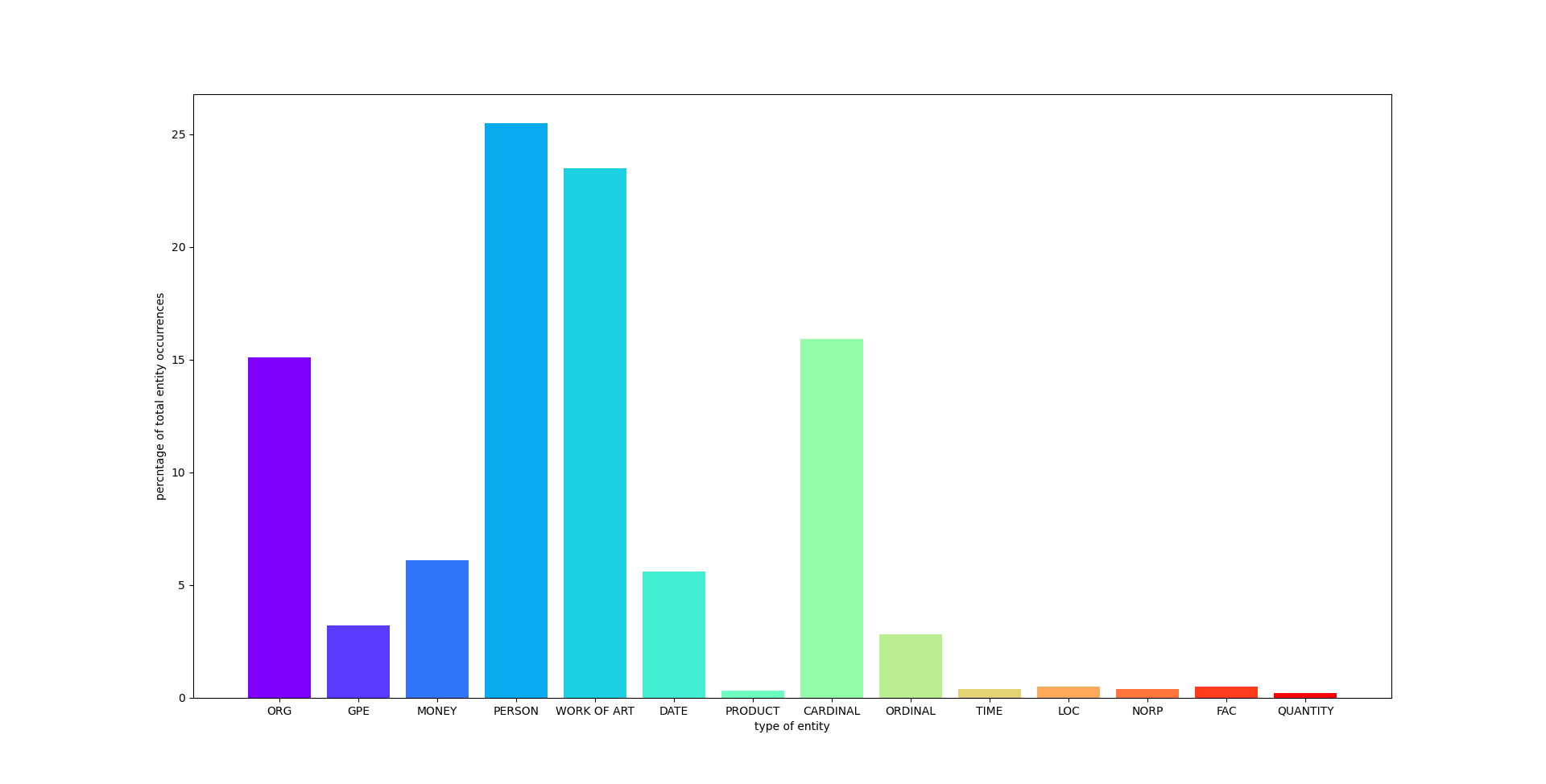

THE NAMED ENTITY RECOGNITION:

For every type of entity present in the text (labeled on the x-axis), the y-axis marks that type’s percentage of all entity occurrences. Note that during processing, many would-be people were mislabeled as works of art (e.g., Linji and the “Followers of the Way”). Had they been labeled correctly, this chart would have been dominated by the “person” entity.
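Each percentage in that chart is one label's count divided by the total number of entities. Here is a minimal sketch that assumes `(entity text, label)` pairs like the ones entities.py collects. spaCy's labels for the two categories discussed above are `PERSON` and `WORK_OF_ART`. The optional `corrections` table (e.g. marking Linji as `PERSON`) is a hypothetical hand-made fix, not something applied to the chart above. The sample data is made up.

```python
from collections import Counter


def label_percentages(entities, corrections=None):
    """Return {label: percent of all entity occurrences}, largest share first.

    entities: iterable of (entity_text, label) pairs.
    corrections: optional {entity_text: correct_label} overrides (hypothetical).
    """
    corrections = corrections or {}
    # Apply any manual relabeling before counting
    labels = Counter(corrections.get(text, label) for text, label in entities)
    total = sum(labels.values())
    return {label: 100 * count / total for label, count in labels.most_common()}


# Hypothetical sample: Linji mislabeled as a work of art
sample = [("Linji", "WORK_OF_ART"), ("Linji", "WORK_OF_ART"), ("Buddha", "PERSON"), ("China", "GPE")]
print(label_percentages(sample))
# {'WORK_OF_ART': 50.0, 'PERSON': 25.0, 'GPE': 25.0}
print(label_percentages(sample, corrections={"Linji": "PERSON"}))
# {'PERSON': 75.0, 'GPE': 25.0}
```

Even this toy example shows how a single recurring misclassified name can flip which category dominates the chart.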

CONCLUSION:

So that’s what that means… but what does that tell us about Zen as a whole? All we know for certain is that the true path to enlightenment involves a lot of scrolling through poorly organized text excerpts on Zen Marrow (no thanks to their zen-tastic user interface)!